The 9th Street Journal, a Durham news publication produced by students in the DeWitt Wallace Center for Media and Democracy, is experimenting with AI-generated news content.

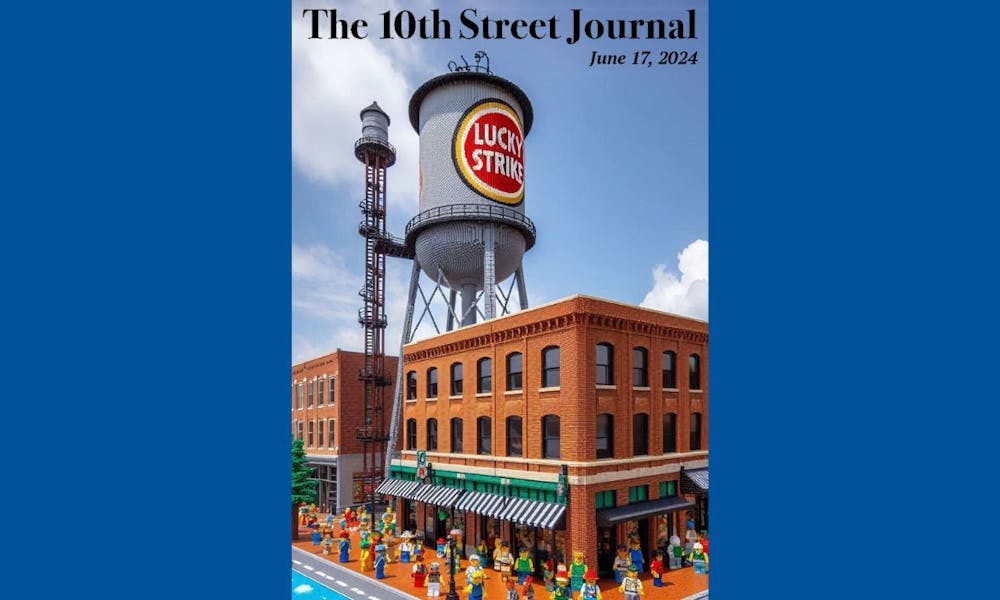

The project, dubbed The 10th Street Journal, employs generative AI to produce stories from “news releases or other official sources” about community events, government actions and meetings. The effort — which labels all content generated by AI — seeks to cover stories that local newspapers lack the resources to report on.

“The generative AI model lets us get those stories out to our readers, and we think that the readers value that sort of information,” said Joel Luther, an associate in research at the DeWitt Wallace Center and a 10th Street editor. “But it [was] not something that we had the time or the resources to do before starting this project.”

Luther believes that AI can help alleviate “news deserts,” or areas that lack a newspaper providing local coverage. Currently, more than half of U.S. counties have only one local news outlet or none at all.

According to Asa Royal, a research associate at the DeWitt Wallace Center, The 9th Street Journal has reporters who investigate and cover the stories that require “accountability,” while 10th Street provides information on “day-to-day” news items.

Luther explained that 10th Street labels all articles generated by AI and discloses that all stories are reviewed by two human editors “for factuality and style” before publication.

Philip Napoli, the James R. Shepley distinguished professor of public policy and director of the DeWitt Wallace Center, believes that failing to disclose which content is AI-generated, or passing off AI-generated journalism as human-made, constitutes a “fundamental breach of ethics.”

Luther echoed this sentiment, emphasizing that there needs to be “a concerted effort on the part of newsrooms to be transparent, and also a concerted effort on the part of readers to demand transparency.”

He added that AI tools are not yet capable enough to merit publication without human review. Luther said that in one story he reviewed, the generative AI model inserted a “long URL” as the location of a library instead of its actual address.

“The limitations of the process is that this AI model is not going out and searching the internet. It's not doing additional reporting. It's not able to pull in factual information from sources that are not directly input into it,” Luther said. “So it's limited in that way.”

Napoli views the human review stage as important because of the potential damage that can be caused by inaccuracies in AI-generated news stories. While a correction can be issued, the updated information may fail to reach audiences in a timely manner.

Discussing potential flaws in AI models more broadly, he pointed out that AI training data may “replicate or exacerbate any structural inequalities that already exist.”

“I worry that there are entities out there … that see the value in putting AI at the center of journalism and humans at the periphery,” Napoli added. “That's not a future of journalism that … I could embrace at all.”

Luther emphasized that 10th Street does not intend to replace student journalism and does not “envision AI replacing traditional journalism anytime soon.” Royal added that AI could allow media outlets to produce news “very cheaply.”

As a Durham resident, Royal said he has grown to rely on 10th Street to fill gaps he sees in local daily news.

He said his main source of local information used to be the “r/bullcity” subreddit, which he described as “an aggregator” of information that other community members had discovered. Now, Royal said, The 10th Street Journal gives him an additional way to stay up to date on Durham news.

Luther pointed out that the presence of a “very communicative” government in Durham is important for the 10th Street model to function.

“A lot of the things that you'll see on The 10th Street Journal are coming from press releases from the government or from other local organizations,” he said. “I would caution that in smaller areas and more rural areas where there might not be as much communication from official sources, it might be harder to adapt to projects like this.”

Generative AI may also support journalism by putting the tools of data science in the hands of more reporters.

“The reality is, there's very few people who are fluent in both data science and journalism,” Napoli said. “So might AI provide a way in which some of the shortcomings of the journalists trying to operate as data scientists could be addressed?”

Luther agreed, saying that AI will supplement journalists' data analysis skills, allowing them to access “treasure troves of data” that they would not be able to use otherwise.

He emphasized, however, that “fundamentally, journalism is human” and requires “human connection.”

Ishita Vaid is a Trinity junior and a senior editor of The Chronicle's 120th volume.